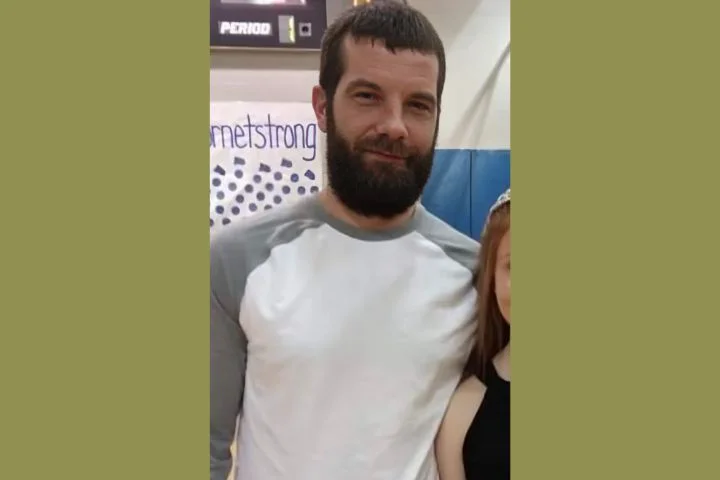

The Unsolved Disappearance of Destin Henderson

39-year-old Destin Henderson was last seen at his apartment complex in Houston, Texas on October 24, 2023….

39-year-old Destin Henderson was last seen at his apartment complex in Houston, Texas on October 24, 2023….

A Florida man is facing two first-degree murd*er charges after police say he stabbed his wife and…

Nearly two months after James Wray, a husband and father of three disappeared, his body was discovered…

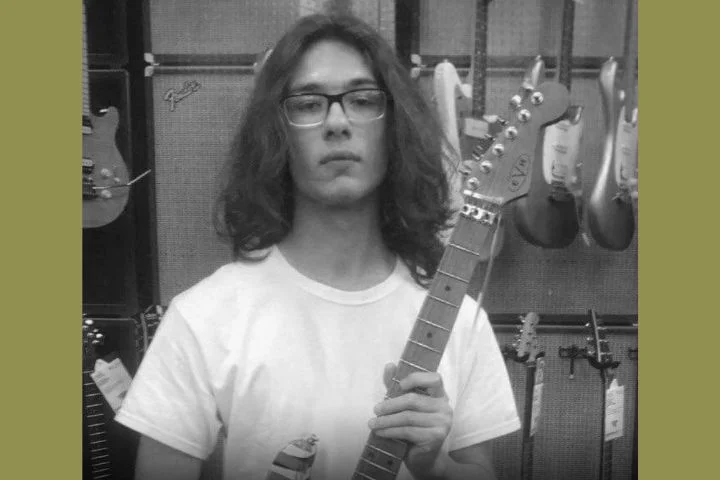

26-year-old Colin Arey was last seen in Brewer, Maine on January 25, 2017. He was not reported…

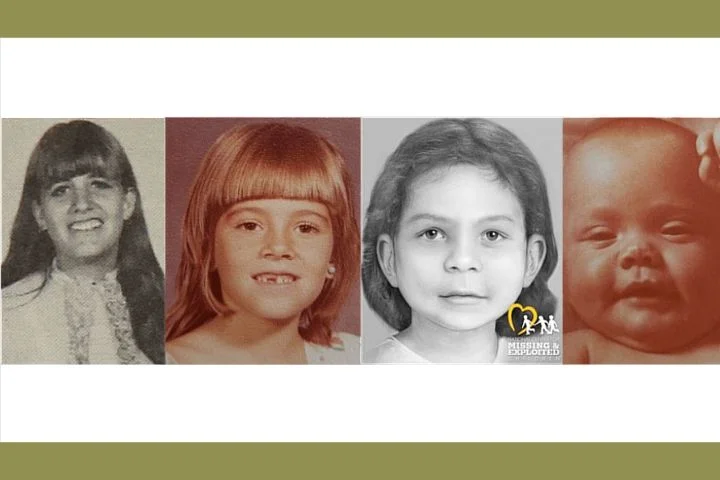

The Bear Brook murders, also known as the Allenstown Four, involve the tragic d*aths of four female…

Erika Antoinette Hill, a 15-year-old American girl, was m*rdered in Fitchburg, Wisconsin, in February 2007. Her body,…

The remains of a 2-year-old boy who went missing eight months ago were found, according to French…

16-year-old Jarrett Brooks was last seen in Joseph City, Arizona on July 4, 2023. He vanished early…

20-year-old Ryan Shtuka was last seen in Sun Peaks, British Columbia on February 17, 2018. He vanished…

A Bartow High School student was ki*lled in a hit-and-run crash on Wednesday, officials said. The Florida…